Editor’s note: Forecast is a social prediction market: an exchange where participants use points to trade on the likelihood of future events. Community members pose questions about the future, make predictions, and contribute to discussions that draw on their collective knowledge. Download the app to join the community.

In this series, we’ll delve into how we think about content moderation in Forecast today, and where we’re trying to go. In this post, we’ll give a little background on Forecast’s current approach to moderation and what makes the problem hard. Next time, we’ll share a few ‘lessons from the trenches’ of prediction market content moderation.

Since we launched Forecast, we’ve known that we eventually want to move toward a community-driven model for content moderation. Community moderation has significant upside. In addition to being scalable, it empowers active members (rather than Forecast developers) to define the values and norms for the group. As we envision it, community-driven moderation can also promote greater accountability: if anyone can become a moderator, and all discussions and decisions are public and appealable, that may help people trust both the actions taken and the information shared within the community. This principle is what motivated us to make all conversations and forecasts in the app public, and to release the Forecast Data Stream.

But community moderation, like content moderation on the internet more generally, is a deeply fraught topic. Throughout the history of the internet, there are countless examples of even thoughtful moderation models going astray, due to issues as mundane as human error and as fundamental as our collective values around speech.

This series is not intended as a discourse on the challenges companies (and societies) face in moderating who can say what. Instead, we want to shed some light on where Forecast is in its winding journey toward helping people work together to (we hope) improve the quality and tenor of information on the internet.

How do we think about moderating questions and content?

Forecast is currently moderated by us and several other members of the Forecast team while we test and build our way toward handing responsibilities over to the community. Our main goal in moderating Forecast is to make questions and their details so clear that Forecasters can spend their time discussing whether the events in the questions will happen, rather than what the questions themselves mean.

While members of the community currently cannot launch, edit or settle questions (or moderate comments), they provide feedback in several forms:

In the app:

- People can see all submitted questions before they launch, upvote their favorites, and give feedback on question phrasing and terms.
- People can report any live question as overly vague, ready to settle, or having some ‘other issue’.

In the beta tester Facebook group:

- People can provide longer-form feedback and discuss questions with us and other forecasters.

You might be wondering: if these feedback channels are working, why don’t we just let the community do the rest of the work (launching, settling, editing)? The short answer, which we’ll go into in much more detail next time, is that moderating user-submitted questions in Forecast is really hard. Question specificity and accuracy, each of which sometimes requires extensive pre-launch research, are the foundation of the app. If a question can’t be settled (meaning no one can definitively say whether the event happened or not), then the market game at the heart of Forecast grinds to a halt. To retain our community’s trust (and engagement), we need to ensure not only that questions are settle-able, but also that they get settled in a timely manner and updated when forecasters need clarification.

That, as it turns out, is easier said than done.

Show me

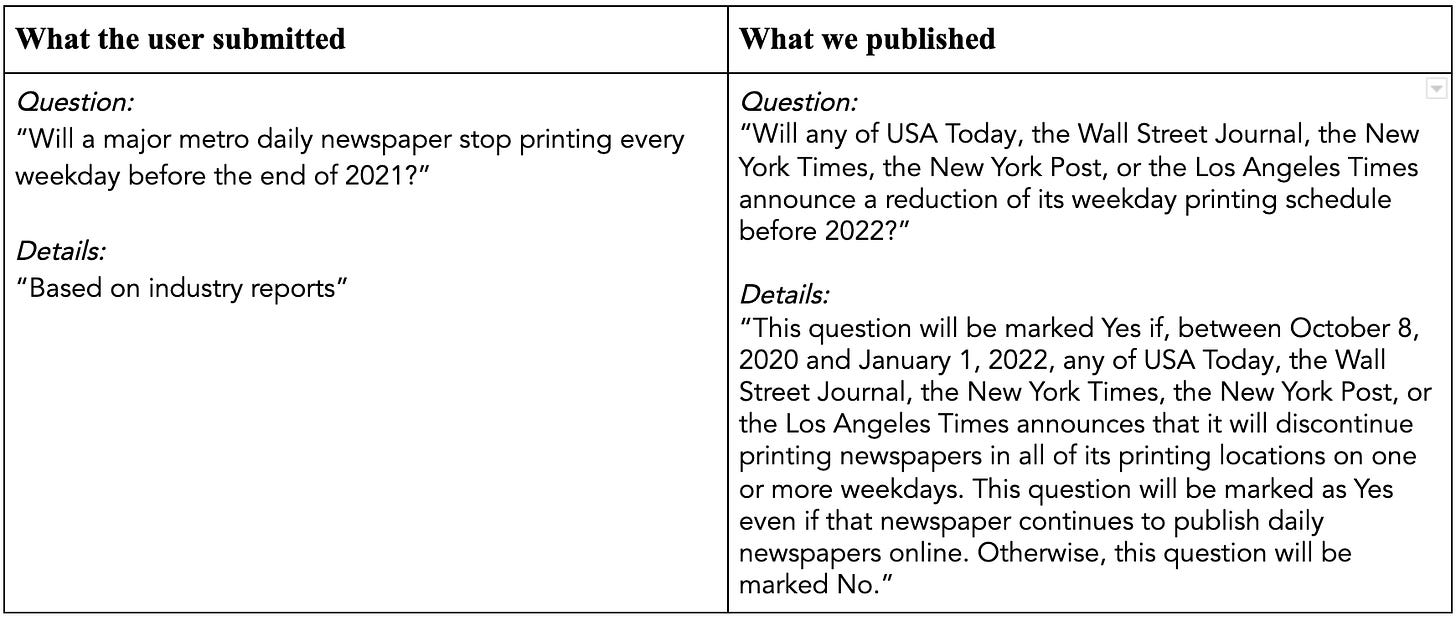

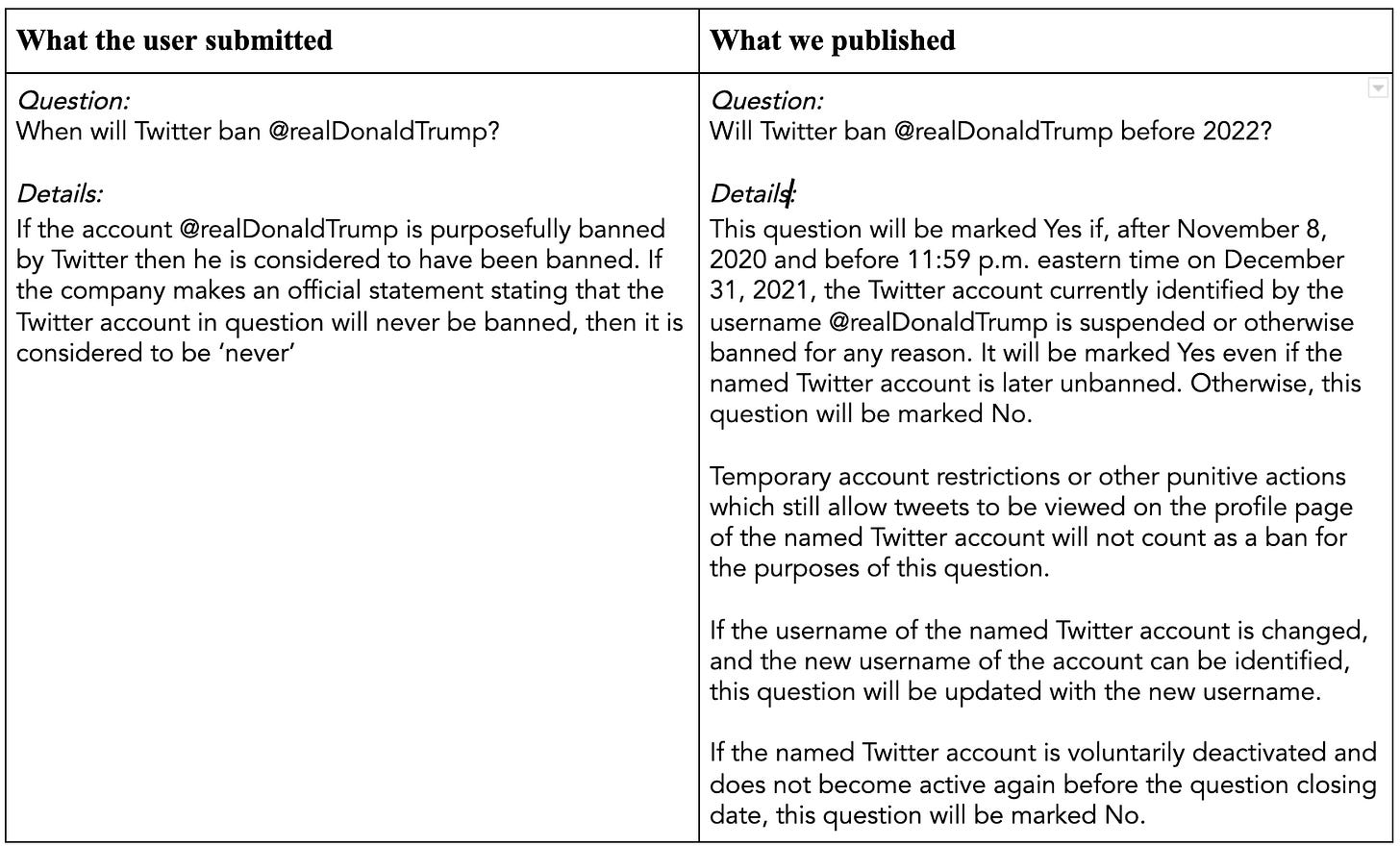

Let’s take a look at a few examples of questions submitted to Forecast, along with what we ended up publishing. We make an effort to launch every submitted question after editing, but all questions must abide by our guidelines and Facebook’s Community Standards.

As you can see, there’s a pretty big difference between what each user initially submitted and what got published. We try to capture the submitter’s intent in the final question (this is one of the functions of the pre-launch comment period), but because of how much a question can change, all questions in Forecast are ‘inspired by’, rather than ‘asked by’, their submitters.

Question moderation has been a learning process for our team. When we started Forecast, we knew that we would need to edit questions for clarity, but we weren’t yet aware of all the pitfalls of writing a vague, unsettle-able question, and we weren’t expecting our eagle-eyed users to be quite so vocal when we got it wrong! As our community has grown, we have come to rely on its members to play a growing role in content moderation.

What’s next

In part 2 of our ongoing series on moderation in Forecast, we’ll go into some lessons learned from the past few months of moderating Forecast. Spoiler alert: it gets messy.